Some developers are vibe-coding whole applications (if you believe them). Others use AI coding assistants as a more intelligent autocomplete, to generate or edit snippets, or simply to ask questions about code or other topics. And yet another group of software engineers is firmly against AI coding assistants and rarely uses them. Either way, having your code reviewed by AI is usually not a bad idea, and gamifying that review could motivate all of the above.

The prompt

The goal of our review is an output that considers several factors. Each factor should be rated from 1 to 10 in 0.5 increments, followed by actionable suggestions on how to improve the code. I tried several variants and ended up with the following:

Assess the provided code based on the following criteria using a 1-10 scale:

Potential Bugs (1=high risk, 10=very low risk)

How likely is this code to introduce bugs or errors?

Tests (1=no tests, 10=comprehensive coverage)

Are there sufficient tests? Is the testing strategy robust?

Clarity (1=very unclear, 10=crystal clear)

How easy is it to understand the code's intent, logic, and structure through reading?

Consistency (1=very inconsistent, 10=fitting in perfectly)

How consistent is the added code? Does it introduce new patterns in naming, design, or style, or is it consistent with the existing code?

Testability (1=very difficult to test, 10=highly testable)

Can the code be easily tested in isolation?

Design (1=poorly designed, 10=well-designed)

How well-architected is the code in terms of organization and modularity?

Security (1=high risk, 10=very low risk)

Are there potential security vulnerabilities?

Average Score: Calculate the average of the seven criteria.

List the scores per category in a table with the average in the last row (always in the form of x/10). Provide the 2-5 most urgent suggestions for improvement in a compact form. Ask the developer whether more information about the improvements is needed. You need to rate consistently throughout.

This gave me the best results. I wanted a brief summary with only a few suggestions at first, which lets me revisit the mentioned code myself and ask for more details if needed. Of course, you could also ask the AI to make the improvements itself.
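Because the prompt asks for a fixed table format, it can help to know exactly what a correct table looks like, for instance to spot-check the AI's arithmetic. The following Python sketch builds that table from a dictionary of scores. It assumes the seven category names from the prompt, rejects scores outside 1-10 or not on a 0.5 step, and rounds the average to one decimal; `build_score_table` and the sample scores are hypothetical, not part of Copilot.

```python
from statistics import mean

# The seven criteria from the prompt, in the order they are listed.
CRITERIA = [
    "Potential Bugs",
    "Tests",
    "Clarity",
    "Consistency",
    "Testability",
    "Design",
    "Security",
]


def validate_score(name: str, score: float) -> None:
    """Reject scores outside the 1-10 scale or not on a 0.5 step."""
    if not 1 <= score <= 10:
        raise ValueError(f"{name}: {score} is outside the 1-10 scale")
    # A score lies on a 0.5 step exactly when doubling it gives a whole number.
    if not float(score * 2).is_integer():
        raise ValueError(f"{name}: {score} is not a multiple of 0.5")


def format_score(value: float) -> str:
    """Render a score as x/10, dropping a trailing .0 (8.0 becomes 8/10)."""
    return f"{value:g}/10"


def build_score_table(scores: dict[str, float]) -> str:
    """Return a Markdown table with one row per criterion and the average last."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing scores for: {', '.join(missing)}")
    for name in CRITERIA:
        validate_score(name, scores[name])

    # Average of all seven criteria, rounded to one decimal (e.g. 8.3/10).
    average = round(mean(scores[c] for c in CRITERIA), 1)

    rows = ["| Category | Score |", "| --- | --- |"]
    rows += [f"| {c} | {format_score(scores[c])} |" for c in CRITERIA]
    rows.append(f"| **Average** | {format_score(average)} |")
    return "\n".join(rows)


if __name__ == "__main__":
    # Hypothetical scores, not taken from the reviews in this article.
    example = {
        "Potential Bugs": 8,
        "Tests": 7.5,
        "Clarity": 9,
        "Consistency": 8.5,
        "Testability": 8,
        "Design": 8.5,
        "Security": 9,
    }
    print(build_score_table(example))
```

Comparing a recomputed table like this with the one in the AI's response is a quick sanity check, since a language model can occasionally report an average that does not match its own per-category scores.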

Review some bad code

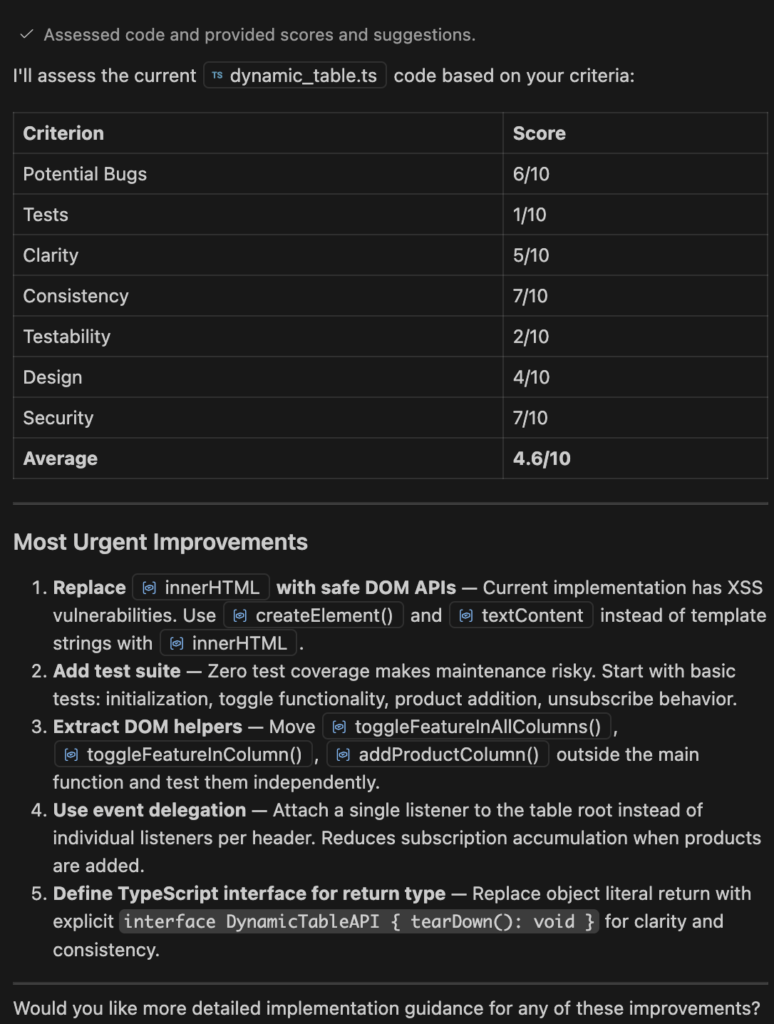

I have tried the above prompt on some code and got the following compact response:

So here we have a lot of potential to improve.

Scoring well

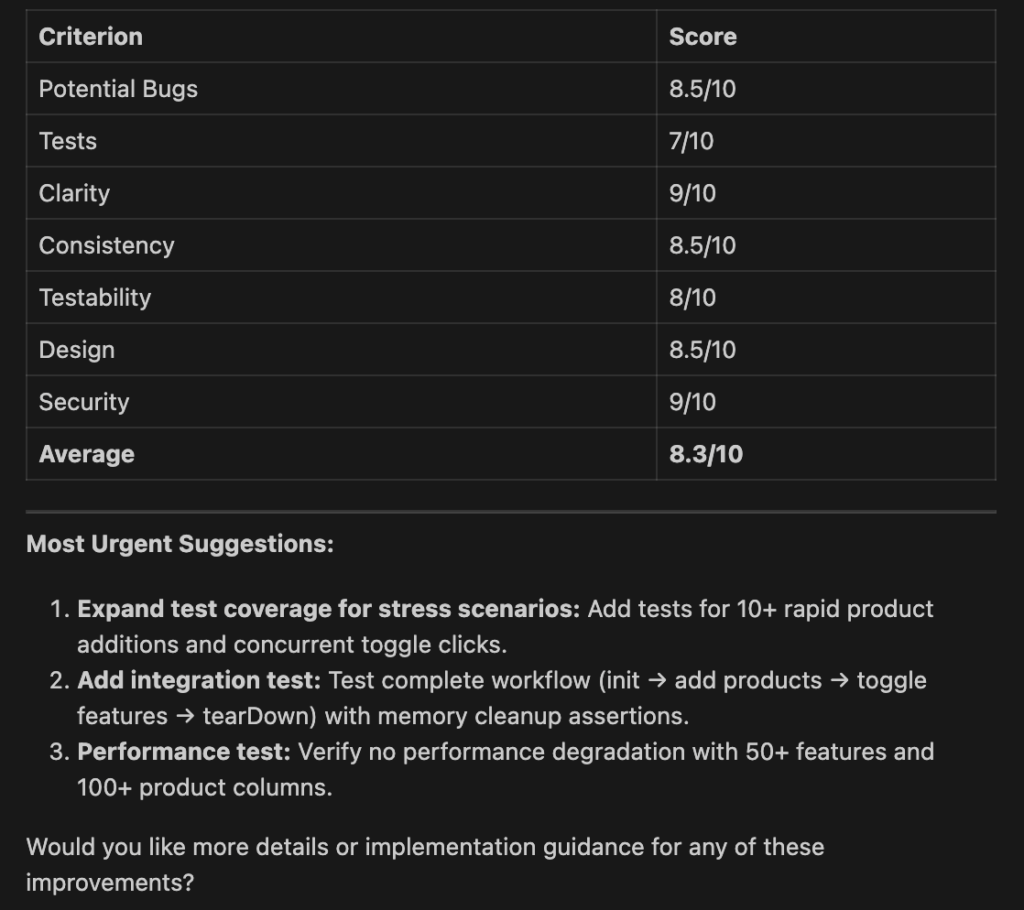

After some improvements I can run the prompt again and I score now 8.3 on average, compared to the 4.6 before:

Does that mean the code is now very good and needs no human review at all? Definitely not! You should review it again yourself, especially if the AI made the changes. But once you push to the remote, your peers will probably be happier with a Merge Request that now looks more polished than before.
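To keep the gamified aspect visible, you might track how each category moves between runs rather than only the average. This is a minimal sketch, assuming you copy the per-category scores from each response into a dictionary by hand; `compare_runs` and the sample numbers are hypothetical and do not reproduce the runs above.

```python
from statistics import mean


def compare_runs(before: dict[str, float], after: dict[str, float]) -> list[str]:
    """Describe per-category changes between two review runs, biggest gain first."""
    # Only compare categories that appear in both runs.
    shared = [c for c in before if c in after]
    if not shared:
        raise ValueError("the two runs have no categories in common")

    deltas = sorted(
        ((c, after[c] - before[c]) for c in shared),
        key=lambda item: item[1],
        reverse=True,
    )
    lines = [f"{c}: {before[c]:g} -> {after[c]:g} ({d:+g})" for c, d in deltas]

    # Averages over the shared categories, rounded to one decimal.
    avg_before = round(mean(before[c] for c in shared), 1)
    avg_after = round(mean(after[c] for c in shared), 1)
    avg_delta = round(avg_after - avg_before, 1)
    lines.append(f"Average: {avg_before:g} -> {avg_after:g} ({avg_delta:+g})")
    return lines


if __name__ == "__main__":
    # Hypothetical scores for illustration only.
    first = {"Tests": 2, "Clarity": 5, "Security": 7}
    second = {"Tests": 8, "Clarity": 8.5, "Security": 7}
    for line in compare_runs(first, second):
        print(line)
```

Sorting by the size of the change puts the categories where your effort paid off most at the top.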

Make it reusable

Finally, we want the prompt to be always at hand. To do this, you can create a .github/copilot/prompts folder containing a prompt file named codeReviewAssessment.prompt.md. The file starts with the following front matter block, followed by the prompt itself:

---
name: codeReviewAssessment
description: Evaluate code quality across seven dimensions with consistent scoring and actionable feedback.
argument-hint: The selected code or codebase to review
---

This lets us run the prompt in Copilot Chat by typing /codeReviewAssessment.
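If you want the same prompt in several repositories, a few lines of Python can create the file for you. This is a minimal sketch, assuming the folder and file name described above and that you pass your final prompt text in as `prompt_body`; `write_prompt_file` is a hypothetical helper, not a Copilot or VS Code API.

```python
from pathlib import Path

# Front matter copied from the block above.
FRONT_MATTER = (
    "---\n"
    "name: codeReviewAssessment\n"
    "description: Evaluate code quality across seven dimensions with consistent "
    "scoring and actionable feedback.\n"
    "argument-hint: The selected code or codebase to review\n"
    "---\n"
)


def write_prompt_file(repo_root: Path, prompt_body: str) -> Path:
    """Write the prompt file under repo_root and return its path."""
    target = repo_root / ".github" / "copilot" / "prompts" / "codeReviewAssessment.prompt.md"
    # Create the folder structure if it does not exist yet.
    target.parent.mkdir(parents=True, exist_ok=True)
    # Front matter first, then a blank line, then the prompt text.
    target.write_text(FRONT_MATTER + "\n" + prompt_body.strip() + "\n", encoding="utf-8")
    return target
```

For example, calling `write_prompt_file(Path("."), prompt_text)` from the repository root creates the folder and file in place, overwriting any previous version.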